I once had a conversation with a tester that went something like this -

"We need the automation to be able to recover from errors. I don't know what those errors will be, or what steps will need to be performed to address those errors, but the upshot is that the automation needs to get the AUT back into a good state before continuing."

I explained that in order to build this kind of logic, at least some knowledge was needed of what constituted an error, and how the error was to be addressed. They rather heatedly told me that if the automation couldn't "just know," it was not going to be helpful.

Here's the thing. We need to set reasonable expectations for test automation. Automation can make testing easier, and can speed up tedious processes. However, automation is not some magical, omniscient force that can interpolate how your application works and how to best recover from problems. If it could, we wouldn't need testers or developers - all our software would write and test itself. Anyone who tells you that automated tests will just magically resolve themselves with no direction on your part (they might be cute and use the word "automagically" - watch out for those people) is someone who's selling something, or is clueless.

Automation is a tool. It's not magic. It's not smarter than you are. It's only as smart as you make it, and it can only do what you tell it. Your automation can never, and will never "just know" how to test your application. The computer is a stupid box. It's just fast. If you don't tell it what to do, it will do nothing, although it will do nothing very quickly.

In short, automation is not magic, it's more like alchemy. It can be complicated at times, but the end result of making the testing process smoother/easier/faster is akin to turning lead into gold.

Monday, August 30, 2010

Friday, August 20, 2010

The Julia Child Approach to Demos

When you're giving live demos, it's always handy to have everything you need for that demo ready to go. Have PowerPoint already loaded, have the application you're demoing already loaded, and have anything else ready to go, too. If you'll need to show a calculation, have Calculator already running. If you'll need to write some code in Visual Studio, have that already running and open to a blank project. The idea here is that your audience should never have to watch a splash screen or "Loading" dialog. Before I run a demo, I launch all the apps I need, and organize them on the Windows task bar so that they're in the order that I'll use them. Then I just click right through the task bar as I go from one activity to the next.

Additionally, if you're going to demo any feature that takes longer than 10 seconds to complete, consider having a "before & after" of that feature, where you walk through the steps needed to perform the action (the before), and then have a finished version of the feature ready to show off (the after).

(A note here: always tell your audience about how much time the operation should take to complete. You don't want to misrepresent how fast your application works; that's a sure-fire way to lose credibility in your customers' eyes. I usually say something like "This operation usually takes about 5 minutes to complete, so instead of making you stare at my hourglass for that much time, I've already got a finished sample right here.")

I call this the Julia Child approach, because I was first exposed to it while watching Julia Child's cooking show back when I was a kid. Julia would have all her ingredients and utensils ready, so she'd smoothly go from one prep task to the next. For example, if she needed to chop some tomatoes, she already had the tomatoes washed, and there was a cutting board and a knife right there. You didn't need to watch her hunt around for anything, she had it ready to go. Then she'd walk you through all the steps to make a cake, then put that cake in the oven. Then she'd move over to a second oven, and remove a cake that had finished cooking. It was a great way to show both the preparation and the finished product, without needing a lot of filler in the presentation.

So having all the pieces of your demo ready to go will make the presentation go smoother, and having a "before & after" prevents awkward lapses in the presentation. All in all, this approach helps ensure a clean, smooth presentation that your customers will eat up. Bon Appetit!

Friday, August 13, 2010

Automate in Straight Lines

Everyone's heard the adage "the shortest distance between two points is a straight line." This holds true in test automation as well. I recently spoke with someone who was struggling to get a UI automation tool to run against an application. She had no prior experience with test automation, and the application was not "automation friendly." As such, she had spent almost an entire week struggling to get tests running.

I spoke with her on the phone for about 30 minutes, and during the course of the conversation, the intent of what she was doing came out. She was not testing the software, she was trying to populate a database with information, and this database would then be used for training purposes. She'd need to repopulate the db each time there was a class to teach. This GUI automation scenario she was trying to create would take hours to run, but she hoped she could start it on the night before a class and then have it be ready in the morning.

I asked her why she didn't just write a plain old SQL script that would populate the db, then create a backup of the database and restore it each time she needed to teach a class. There was silence on the other end of the line.

Look for the simple solutions. Databases can be written to directly, and then restored, over and over again. Webservices can be manipulated directly, methods can be called from dlls directly, the Windows Registry can be manipulated directly. If your intent is just to get data from point A to point B, do it in a straight line via script. Adding other layers of complexity (UIs, GUI tools, etc) is kind of like trying to go from New York City to Boston by way of Los Angeles.
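The straight-line version of that training database job might look something like the sketch below. It is only an illustration: the post doesn't say which database was involved, so this assumes SQLite (where a backup is just a copy of the file), and the `students` table, its rows, and the function names are all made up for the example. For a server database you would use that product's own backup and restore tooling instead.

```python
import shutil
import sqlite3
from pathlib import Path


def build_training_db(db_path: Path) -> None:
    """Populate a fresh database directly with SQL, no UI involved.

    The schema and rows here are hypothetical sample data.
    """
    conn = sqlite3.connect(db_path)
    try:
        conn.executescript(
            """
            CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
            INSERT INTO students (name) VALUES ('Ada'), ('Grace'), ('Linus');
            """
        )
        conn.commit()
    finally:
        conn.close()


def restore_training_db(backup_path: Path, db_path: Path) -> None:
    """Overwrite the working database with the known-good backup copy."""
    shutil.copyfile(backup_path, db_path)
```

The idea is to run `build_training_db` once, copy the resulting file somewhere safe as the backup, and then call `restore_training_db` before each class. That takes seconds rather than the hours a GUI-driven run would need.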

Monday, June 14, 2010

MS Office Automation

I've worked with a lot of people who have wanted to automate MS Office apps. Sometimes they're trying to just write results data out to a Word doc; other times, their company builds a plug-in to an Office app and they're trying to create automated tests for that plug-in. And they all start out by pointing a commercial record & playback tool at the app and clicking that red "record" button. At best, this results in tests that click on obscurely named objects; at worst, in tests that are driven exclusively by X/Y coordinates.

You see, MS Office apps (Word, PowerPoint, Excel, Outlook) are not record & playback friendly. I learned this the hard way a long time ago, and I'm hoping to spare others the same pain. You will not be able to create a reliable, robust set of MS Office tests via a record and playback tool. The only way I've found to effectively work with Office apps is via scripting. The Office object model lets you programmatically access any bit of text, cell or slide. For example, this code launches Word, opens a new document, and writes Hello:

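' Launch Word through its COM object model
Set objWord = CreateObject("Word.Application")

objWord.Visible = True

' Open a new, blank document
Set objDoc = objWord.Documents.Add()

' Selection belongs to the Application object, not the Document
objWord.Selection.TypeText("Hello")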

If you try to do those same actions via record & replay, your results will be spotty at best. So when you need to work with Office apps, automate them via their object model. A little-known fact is that MS ships help files with Office that describe each app's object model, along with coding examples. The location of these files is [OfficeInstallDirectory]\[OfficeVersion]\1033

The app's help files are:

- Word - VBAWD10.chm

- Excel - VBAXL10.chm

- PowerPoint - VBAPP10.chm

Keep these handy next time you're doing MS Office automation, and your automation efforts will be much more successful.

Friday, June 11, 2010

Silver Bullets and Snake Oil

After doing test automation for most of my professional life, I'm really tired of vendors claiming that automation is a silver bullet. I'm also tired of test managers claiming that automation is snake oil. Here's the thing. The silver bullet pitch ("Automate all tests with the click of a button; no coding knowledge or original thought needed") is snake oil. I've said before on this blog that if you want to automate, you need to learn how to write script. I still stand by that. So it's the vendors' claims that are the problem, not automation itself.

Let's look at this using a different example - the microwave oven. The little cookbook that came with my microwave claims the microwave can cook any food just as well as the traditional methods. But that's really not true. While the microwave can cook just about everything, the food comes out different. Chicken comes out rubbery, for example. The button marked Popcorn cooks popcorn for too long, and often burns it. There's a recipe for cooking a small turkey, but I'm not courageous enough to try that.

Now, the cookbook is making a silver bullet pitch: "The microwave cooks everything just as well as traditional means." And, based on my experience, that's not the case. However, does that mean that I should throw the microwave away, and denounce all microwave ovens as worthless? No. It means I still use the regular oven to cook chicken, and I manually key in how long to cook the popcorn. In short, I adapt the microwave to my needs and use it for what it does well. I still have a grill, a deep fryer, an oven and a stovetop. I microwave what's appropriate for me to microwave and that's it.

Same thing for automation. I would never recommend (or even try) automating 100% of your tests. I would recommend trying to automate tasks that are difficult or impossible to perform by hand. I would recommend automating "prep" tasks, like loading a database or building strings for use in boundary testing. I would recommend using tools to automatically parse log files for errors, rather than trying to read them by hand. The application of automation is the important thing here; you need to be smart about what makes sense to automate, just like you need to be smart about what you try to cook in the microwave.
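As one small example of that kind of tool, here is a rough sketch of a log scanner. It assumes that error lines contain the word ERROR or FATAL in capitals, which is only a guess at a log format; the pattern and the `find_errors` name are hypothetical and would need adjusting to whatever your application actually writes.

```python
import re
from typing import Iterable, List, Tuple

# Assumption: error lines contain ERROR or FATAL as a whole, upper-case word.
ERROR_PATTERN = re.compile(r"\b(ERROR|FATAL)\b")


def find_errors(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, text) for every line that looks like an error."""
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(lines, start=1)
        if ERROR_PATTERN.search(line)
    ]
```

Because `find_errors` accepts any iterable of lines, you can hand it an open file object directly, for example `with open("app.log") as f: print(find_errors(f))`, where `app.log` is a placeholder file name.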

Silver bullets are for werewolves. Snake oil is for 19th century hucksters. Automation is neither. Automation is for testers and developers who want to put in some effort to speed up their existing processes. It's just a tool. That's all.

Wednesday, June 9, 2010

Ethics in Automation

Automation really shines when it's used to speed up a process or to address some tedious task. Unfortunately, some less than scrupulous individuals have asked me to do some things with automation in the past that are just flat out wrong. Case in point: someone once asked me to create an automated test to search on Google, and then perform click-throughs on a competitor's AdWords. The theory was that, since the competitor had to pay each time that link was clicked, it would cost the competitor a ton of money. I'm pretty sure that Google has some sort of mechanism set up to prevent click fraud, but the fact that someone would stoop to measures like this really pissed me off. I told that person no. Firmly.

Another time, someone else asked me to write a script that would artificially inflate the number of times a particular page had been viewed. He felt that if people saw the page had a high number of views, it would seem "more interesting" to other viewers and they'd focus on it. Again, I said no. Firmly.

I don't mean to go high-and-mighty, or holier-than-thou, but the fact of the matter is that unscrupulous business practices shouldn't be performed in the first place, and they sure as hell shouldn't be automated.

Always make sure to use your automation powers for good, not evil.

And now I'll get off my soapbox.

Thursday, June 3, 2010

Cloud vs Traditional: Which Load Test Approach is Better?

Hi All

I'd like to get your opinions on cloud based load testing tools like LoadStorm and BrowserMob vs more traditional tools like LoadRunner and WebPerformance. What advantage do the cloud tools provide that the traditional ones don't, and vice versa?

Here's my background - I was taught how to do load testing with LoadRunner. My mentor said that you should always set up an isolated network and run tests in that environment to get your app's code running well. Once that's done, move the code to a staging/production environment and then generate load from outside the network against your production environment to see how the hardware responds.

Is this still the recommended way of doing things? If so, it seems that the cloud based tools are at a disadvantage because they can't test against an isolated network. Or, can the results generated by the cloud based tools easily identify where the performance bottlenecks are?

Please sound off in the comments.

Thanks

Nick

Thursday, May 27, 2010

Just Get On the Bike

There's an old story that goes something like this:

Little Timmy is very late to school one morning. It's 10:30, and the teacher looks out the classroom window and sees Timmy just getting into the schoolyard. He's pushing his bicycle and looks very hot and out of breath. When he gets into the classroom, the teacher says:

"Timmy, why are you so late to school?"

Timmy replies, "Sorry teacher, I had to push my bicycle all the way here."

The teacher, confused, says, "Why didn't you ride your bicycle?"

Timmy replies, "Teacher, I was running so late this morning that I didn't have time to get on the bike."

I hear this kind of argument all the time when people put off automating. "I have too much to do," they say. "When this release settles down, I'll have time then."

Bullshit.

Folks, I hate to say it like this, but you're never going to have that perfect moment where you do nothing but automate. If you're a tester, then there's always something that will need testing. You have to find ways to work automation development into your regular day. If automation is something that you throw to the back burner every time your workload heats up, you'll never get any benefits from automation.

Sometimes this means explaining to your manager that automation can't be a side project and should be focused on sooner rather than later. Sometimes it means recruiting a developer to help get you jumpstarted, so you can get a test framework built up quicker. Sometimes it means hiring another person whose primary responsibility will be test automation.

Point is, if you're always waiting for that perfect moment, you'll never get anything automated. So do *something*. Even if it's just a small test to start with, that's something you can build on. You won't get a comprehensive set of automated tests overnight; so get one test automated/one helpful tool written and then add to that. Even if you only automate one new test a week, over the course of a month, that's 4 tests you don't have to run by hand. Or, if you're trying to build a tool that will augment manual testing, get that to your testers as soon as it can do something useful, then continue adding features. The time savings will get more and more significant as you go along.

So, long story short, just get on the bike!

Monday, May 10, 2010

TUU: The Missing Metric

Before taking the plunge into test automation, many managers want to know how long until they see an ROI (return on investment). In the case of a commercial tool, some vendors will actually send out an Excel spreadsheet with formulas that let you plug in the amount you pay a tester per hour, and then the spreadsheet calculates how much it costs to find that bug during the test phase vs how much it costs to find it once the app has gone to the customer.

While I certainly understand the need to justify an expense, I think ROI by itself is the wrong way to look at test automation, because it puts the focus on dollars rather than on utility. If you buy a $9,000 automation tool, and pay your tester $40/hour, then using the ROI approach, your test automation tool needs to run 225 hours' worth of tests before you see an ROI. If you've bought multiple copies of that tool, then you need to multiply both the total cost and the total number of hours by the number of copies you purchased. So if you bought 5 copies of the test tool, that's $45,000 you spent, and you need to run 1,125 hours' worth of tests to break even. "That's too much time or money", someone says. "Look at a cheaper tool."

What I don't see people consider is how long it will take before the automation is doing something productive. So let's say that you're considering spending money on a commercial tool. Instead of saying "How long until I see an ROI", ask "How long until this lets me do something else?" Automation is implemented so that you don't have to do the same tasks over and over; you automate to get the computer doing regression tests so you can focus on other test activities. How long will it take you to create that first smoke test? How long until you are freed up to do the new tests? Let's say it takes you 2 work days to get your smoke test up and running. That means that two days after implementing automation, you start getting some of your time back. That's how long until the automation is doing something useful. I call this Time Until Useful (TUU).

I think that 16 hours until your automation is doing something useful is a lot more palatable than 1,125 hours until your automation justifies its existence. Plus, now you're working and finding bugs that you didn't have time to find before. I've never seen that time savings or "new-bugs-found-during-exploratory-testing-while-the-automation-runs-a-smoketest" line item included on an ROI sheet.
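For anyone who wants to plug in their own numbers, the arithmetic behind those figures can be sketched in a few lines. The function names are just illustrative, and the 8-hour work day is an assumption implied by "2 work days" equalling 16 hours.

```python
def break_even_hours(tool_cost: float, copies: int, tester_hourly_rate: float) -> float:
    """Hours of testing the tool must replace before its purchase cost is recovered."""
    return (tool_cost * copies) / tester_hourly_rate


def time_until_useful_hours(work_days: float, hours_per_day: float = 8) -> float:
    """Hours until the first automated test is running (assumes an 8-hour day)."""
    return work_days * hours_per_day


print(break_even_hours(9000, 1, 40))  # 225.0
print(break_even_hours(9000, 5, 40))  # 1125.0
print(time_until_useful_hours(2))     # 16
```

Putting the two numbers next to each other makes the point: the break-even figure grows with every license you buy, while the time until the first smoke test is running does not depend on the price tag at all.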

If you're considering open source tools, TUU is a much more natural metric. Freed from cost concerns, a test team is given the chance to look at a bunch of tools and pick the one that's going to let them get the most done the fastest. I've seen teams where managers say 'oh, you're going open source?' and then promptly go deaf because they think that since there's no cost involved, they don't need to justify any expenses, therefore they don't care. Thing is, open source tools will have a bit of a learning curve as well, and you want to make sure that your team selects one that meets their needs and still has a good TUU. If the tool is free, but it takes 2 months before it's doing anything of value, is that worthwhile? No, you'd want them to look at a different open source tool that's going to do something sooner than that.

TUU is a metric that should be considered with the same weight (or maybe with greater weight) as ROI. Whether you're going commercial or open source, you should always consider how long it will be until the automation is doing something useful.

Friday, May 7, 2010

String Generator

If you've ever had to do boundary testing, you probably did what my co-workers and I used to do. You need a 400 character string, so you open MS Word, hold down the 'x' key for a few seconds, and then use the word count feature to see how many characters you've got. If you've got too many, you delete some. If you don't have enough, you mash 'x' for a few more seconds. Lather, rinse, and repeat until you've got it right.

Some folks would even remember to save those Word files for future use, but more often than not we closed them without thinking about it. (This was usually followed by the comment "Ah, crap, I shoulda saved that...")

To help take some of the sting out of this, I wrote a string generator application. It lets users generate strings of predefined lengths, and then automatically puts those strings on the clipboard so that they can be pasted into fields.

I wanted an easy way to know that a field could accept that number of characters, so I always had the string end with a capital Z. For example, if you needed a 10 character string, it would generate aaaaaaaaaZ. Then you'd paste that into the desired field, and if you didn't see the 'Z', you knew the field was truncating the data that was entered.

The app I wrote looked like this:

Each of the buttons you see on the left would automatically generate a string of the specified length. If you needed a string of a different length, you could enter that length in the box next to the custom button.

The string generation itself was handled by this code:

private string prgGenerateString(int intLen)

{

StringBuilder MyStringBuilder = new StringBuilder();

MyStringBuilder.Capacity = intLen;

for (int i = 1; i < intLen; i++)

{

MyStringBuilder.Append("a");

}

MyStringBuilder.Append("Z");

return MyStringBuilder.ToString();

}

private void prgGenerateStatusMessage(int intLen)

{

lblStatus.Text = intLen.ToString() + " character string placed on clipboard.";

}

private void prgCreateStringAndPost(int intLen)

{

try

{

string txtValue = prgGenerateString(intLen);

txtPreview.Text = txtValue;

prgGenerateStatusMessage(intLen);

Clipboard.SetDataObject(txtValue);

}

catch(Exception except)

{

lblStatus.Text = except.Message;

}

}

Each button on the form had code like this:

private void cmd129_Click(object sender, System.EventArgs e)

{

prgCreateStringAndPost(129);

}

And here's the code for the custom button:

private void cmdCustom_Click(object sender, System.EventArgs e)

{

if (txtCustomLength.Text != "")

{

try

{

int val = Convert.ToInt32(txtCustomLength.Text);

prgCreateStringAndPost(val);

}

catch (Exception except)

{

lblStatus.Text = except.Message;

}

}

else

MessageBox.Show("Please enter a value in the box next to the Custom button");

}
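If you'd rather not build the form at all, the core idea fits in a few lines. Here's a minimal sketch, not the code from my app: the BoundaryString class and its Build and WasTruncated methods are names I made up for illustration, and I'm assuming that a length below 1 should be rejected rather than quietly producing a lone Z.

```csharp
using System;

// Hypothetical helper, not part of the original app.
public static class BoundaryString
{
    // Builds a string of exactly 'length' characters that ends with a capital Z.
    public static string Build(int length)
    {
        // Assumption: a length below 1 is a mistake, so fail loudly.
        if (length < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(length), "Length must be at least 1.");
        }

        return new string('a', length - 1) + "Z";
    }

    // True when the value read back from a field has lost its trailing Z,
    // which means the field cut the string short.
    public static bool WasTruncated(string fieldValue)
    {
        return !fieldValue.EndsWith("Z", StringComparison.Ordinal);
    }
}
```

Calling BoundaryString.Build(10) gives aaaaaaaaaZ, and WasTruncated tells you whether the Z survived the trip through the field.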

Feel free to take this code and build your own string generator. (If the source code got truncated in the post, just go to View Source in your browser and you can copy and paste from there)

Enjoy!

Friday, April 30, 2010

Time Diffs

During one cycle of performance testing, the tool I was using only logged a test's start and end time. For some reason, it didn't log duration. So you'd see start time 10:34:23 and end time 10:39:00. Now, in the case of a short test, say, one that ran in 15 or 20 mins, that wasn't a big deal; it's easy to do that kind of math in your head. But when the start time was 10:32:23 and the end time was 13:18:54, it would take a minute or so for me to calculate how long that was.

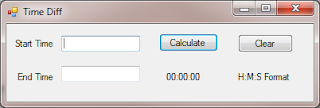

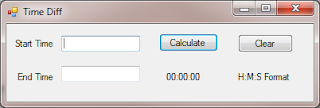

This was a frustrating experience, and one that was prone to human error. So I wrote a little C# app that allowed me to enter two times and see the difference between them. This let me note the test durations much faster and more precisely. To build your own time diff tool, fire up Visual Studio and create a form that looks like this:

For reference, I named the Start Time field txtStart, the End time field txtStop, the Calculate button is cmdCalculate, and the Clear button is cmdClear. I was lazy and left the label that you see as 00:00:00 as label1.

Now, use the following code for the calculate button:

private void cmdCalculate_Click(object sender, EventArgs e)

{

if ((txtStart.Text != "") && (txtStop.Text!=""))

{

DateTime start = DateTime.Parse(txtStart.Text);

DateTime stop = DateTime.Parse(txtStop.Text);

TimeSpan duration = new TimeSpan();

duration = stop - start;

label1.Text = duration.ToString();

}

}

Here's the code for the Clear button:

private void cmdClear_Click(object sender, EventArgs e)

{

txtStart.Text = "";

txtStop.Text = "";

label1.Text = "00:00:00";

}

And that's it. You now have a little app that will calculate the differences in time for you. One thing to note here is that the app isn't intended to diff times that are more than 24 hours apart, and you have to enter the times in a 24 hour clock format. So if your test ended at 2 pm, you'd enter 14:00:00.
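If your tests ever run past midnight, the subtraction comes out negative. Here's a rough sketch of one way to handle that, assuming the test crossed midnight only once. The TimeDiff class and its Elapsed method are hypothetical names, not part of the app above, and I'm parsing with TimeSpan.ParseExact so that only the HH:mm:ss format is accepted.

```csharp
using System;
using System.Globalization;

// Hypothetical helper, not part of the original form code.
public static class TimeDiff
{
    // Returns the time elapsed between two 24-hour clock readings ("HH:mm:ss").
    public static TimeSpan Elapsed(string start, string stop)
    {
        const string format = @"hh\:mm\:ss";
        TimeSpan begin = TimeSpan.ParseExact(start, format, CultureInfo.InvariantCulture);
        TimeSpan end = TimeSpan.ParseExact(stop, format, CultureInfo.InvariantCulture);

        TimeSpan duration = end - begin;

        // Assumption: a negative result means the test ran past midnight exactly once.
        if (duration < TimeSpan.Zero)
        {
            duration += TimeSpan.FromDays(1);
        }

        return duration;
    }
}
```

With a start of 10:32:23 and an end of 13:18:54, Elapsed returns 02:46:31. A start of 23:50:00 and an end of 00:10:00 comes out to 00:20:00.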

This is a nice example of creating a tool that augments manual testing. It doesn't do any testing on its own, but it does let a tester perform a given activity faster and more efficiently. Always be looking for little opportunities like this, and your testers will thank you for them.

Wednesday, April 28, 2010

Focus on Test Approach, Not Test Tools

There's a lot of news flying around right now about how PowerPoint is making us stupid. Thing is, I don't think this is a PowerPoint problem, I think it's a people problem. Slides probably aren't the best method to communicate this information, and that certainly needs to be remedied. So it's the approach that these presenters are taking that's the problem, not PowerPoint itself.

This got me thinking about some of the negativity that comes up when the honeymoon period with a test automation tool ends. I've seen teams whose intent/dream/goal was to create UI automation tests via a record and playback tool. They envisioned doing a single recording and all their tests would then be able to run at the push of a button.

It's a nice dream, but in order to really get the most out of your automation efforts, you really need to know what you're doing. It's impossible to automate all your tests, and quite frankly, you probably don't need to. I once worked for a manager who insisted that all bugs have an automated regression test for them. Think about that for a minute. All bugs. Every one of them. Even the ones that were citing spelling mistakes.

Needless to say, that wasn't a good use of my time, and I'm pretty sure my blood pressure was in the near fatal range for a while there.

When you're thinking about automation, think about what makes the most sense to automate. Smoke test? Yep. Get that going and integrate it with your build process. Regression test? Yep, but don't go crazy. Focus on the primary workflows of your application, don't try to automate a test for every bug you've ever logged. That whizbang new functionality that Sally just rolled into the build? Probably not.

That whizbang new functionality probably should not be automated yet because its UI will change, and most likely will change a lot over the course of the release cycle. Instead, use automation to augment manual and exploratory testing for this feature. Let's say that Sally's piece is a report generator that pulls data from a database. Build some automation that sets up different data in the db, allowing you to test more types of her reports more quickly. Maybe you can set up a batch file that calls out to a diff tool and compares a report from today's build to a baseline.
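If you don't have a diff tool handy, the baseline comparison can be roughed out in code. This is only a sketch, assuming the reports are plain text files. ReportDiff, Compare, and CompareFiles are names I made up, and the line-by-line check is much cruder than a real diff tool: one inserted line will make every line after it show up as different.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper for comparing today's report against a saved baseline.
public static class ReportDiff
{
    // Returns one message per line number where the two reports disagree.
    public static List<string> Compare(string[] baseline, string[] current)
    {
        var differences = new List<string>();
        int lineCount = Math.Max(baseline.Length, current.Length);

        for (int i = 0; i < lineCount; i++)
        {
            // A report that ends early is treated as having "<missing>" lines.
            string expected = i < baseline.Length ? baseline[i] : "<missing>";
            string actual = i < current.Length ? current[i] : "<missing>";

            if (!string.Equals(expected, actual, StringComparison.Ordinal))
            {
                differences.Add("Line " + (i + 1) + ": expected \"" + expected + "\" but found \"" + actual + "\"");
            }
        }

        return differences;
    }

    // Convenience wrapper that reads both reports from disk.
    public static List<string> CompareFiles(string baselinePath, string currentPath)
    {
        return Compare(File.ReadAllLines(baselinePath), File.ReadAllLines(currentPath));
    }
}
```

An empty list means today's report matches the baseline. Anything else is a cue for a human to go look at the report.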

The approach you take will largely determine your automation effort's success. Don't assume that just because you bought a record-and-playback tool, you've got test automation covered. The test tool is a dumb computer program; you're the one with the brain. Know when to employ the tools you have and when to use something else, and your testing will go much more smoothly.

Friday, April 9, 2010

What's in it for me?

As a presenter, that's the question you always have to be asking yourself. Imagine yourself in your audience's position - you are taking time out of your busy workday to view a demonstration. As such, you want to know how this new product or service will benefit you. When you first sit down to hear the presenter speak:

- You do not care about the presenter's company history.

- You do not care about the awards the presenter's company has won.

- You do not care about the demonstrated product's history.

Yet it's amazing how many people start their presentations off with a bunch of slides that agonizingly detail those points. When I watch a demo, I want to know if a product is going to help me do my job effectively. Once I know that it will, then it might be nice to know more about the awards and company history. But that's not what's essential.

The essence of the demo should show the audience how your product will solve a problem they have. Before you present, make sure you understand the audience's pain. Are they performing a tedious task that is prone to errors? Are they trying to speed up their app's performance? Do they need a better way to communicate between multiple departments?

Once you understand the problem, you can apply your product's features and show how those features turn into benefits for the audience. And that's the key - too many people will show a cool feature in their product, but not state how that feature benefits the audience. Always make sure you state the benefit - "Cool Feature X is important because it allows you to process your data 4x faster than before, which saves you a ton of time over the course of a release."

By tailoring your demos so you show how to solve your audience's problems, you'll be a much more effective presenter.

Friday, March 26, 2010

The Power of the Mute Button

A big challenge any remote presenter faces is that it's too darned easy for an audience participant to tune out. You're already sitting in front of your computer, watching a demo, and then an important email shows up. Or an IM pops in. Or someone drops by your cube and wants to chat. So you start typing, or chatting, and if you haven't muted your phone line, that background noise is going to come through.

This is frustrating for the person presenting. Imagine if this scenario played out in a conference room. There, the presenter would politely ask to table the side discussion until later, or see if it was something he could help with. If it wasn't related to the item being presented, the people could step out of the room and not distract anyone. But when you're demoing remotely, this isn't an option. In that situation, you need to be able to mute the person remotely. If you can't, you're never going to get your message across.

Case in point:

A few months back, I attended a webinar that Microsoft was putting on about Visual Studio 2010. The presenter had to stop several times to ask people to mute their lines. Now here's the thing - if you're the person who's stopped paying attention to the call and is now typing, chatting, or playing video games, you're not going to notice the presenter asking people to mute. So he had to contend with a metric-crap ton of background noise. As an audience member, it was extremely frustrating to try and filter out the noise from the presenter's message, and to be honest, I can't remember much of what he talked about.

When I give remote demos, I have the ability to mute people remotely when stuff like this happens. The program I use (GoToMeeting) also has a chat window. So if there's a lot of background noise coming from a particular participant, I'll say "Ok, folks, it sounds like -insertPersonNameHere- has a really sensitive microphone that's picking up a lot of background noise. I'm going to mute them, but if they have questions, they'll be able to let me know via the chat window, and I'll unmute them."

So make sure you have the ability to mute people remotely. It will make your presentations run more smoothly, both for you and for your audience.

Friday, March 19, 2010

Learning Regular Expressions

During the course of your automated test efforts, you'll come across regular expressions. Regular expressions are an extremely powerful tool, but they're daunting when you're first learning them. I mean, really, can you just look at this line of code and tell me what it does?

re = /^((4\d{3})|(5[1-5]\d{2}))[ -]?(\d{4}[ -]?){3}$|^(3[47]\d{2})[ -]?\d{6}[ -]?\d{5}$/;

The above regular expression is used to verify that a credit card number is formatted correctly. (This is also a great example of why you should comment your code.) Such an expression is very useful, but how the heck do you write these things?

Fortunately the good folks over at Regular-Expressions.info have put together a great tutorial that explains regular expressions in detail. You'll learn what regular expressions are good for and how to write them. This is an extremely useful skill for an automator. You can perform more robust verifications with your tests, and you can use them to do other tasks like pull particular strings out of log files, or ensure email addresses are properly formatted.

It's also handy to have a tool that you can use to verify your expressions are working properly. For that, I recommend Roy Osherove's Regulator. It's free, and allows you to test your regular expression against a variety of inputs, allowing you to make sure you'll correctly match the patterns you're expecting.
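You can run the same kind of check in code, too. Here's a minimal sketch that feeds a few inputs to the credit card pattern using .NET's Regex class. CardFormat and LooksLikeCardNumber are hypothetical names. I've written the last character class as [47] so that a comma isn't accepted, and I'm assuming RegexOptions.ECMAScript is wanted so that \d means 0-9 only, the way it does in JavaScript. Keep in mind the pattern only checks the shape of the number; it doesn't run a checksum.

```csharp
using System.Text.RegularExpressions;

// Hypothetical wrapper around the credit card pattern from this post.
public static class CardFormat
{
    // First alternative: 16 digits starting with 4 or 51-55, in groups of four.
    // Second alternative: 15 digits starting with 34 or 37, grouped 4-6-5.
    // Groups may be separated by a single space or hyphen.
    private static readonly Regex Pattern = new Regex(
        @"^((4\d{3})|(5[1-5]\d{2}))[ -]?(\d{4}[ -]?){3}$|^(3[47]\d{2})[ -]?\d{6}[ -]?\d{5}$",
        RegexOptions.ECMAScript);

    // True when the input has the shape of one of the card formats above.
    public static bool LooksLikeCardNumber(string input)
    {
        return Pattern.IsMatch(input);
    }
}
```

Passing in 4111 1111 1111 1111 or 378282246310005 returns true, while 1234 5678 9012 3456 returns false because no branch of the pattern allows a leading 1.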

Happy Automating!

Friday, March 12, 2010

Test Workflows, Not Features

So you've got a shiny new feature in your application. Maybe it's a new button, or a new context menu item. You get your new build, load it up, and click the button. It works - Huzzah! Test passed, move on to the next feature!

Whoa, slow down there, chief. You've verified that the feature works in isolation, but how will your end users be using this button? Will they be clicking this button all by itself every time? Chances are there's a whole workflow that this button will be involved with, and you need to make sure you understand what that workflow is. So figure out what your users are going to do with this feature, and how they're going to do it. What other steps will they be performing before using this feature? What options could they set that would impact how this feature behaves?

Only when you have a good grasp on how the new feature will actually be used can you say whether or not it's working properly. Design your test cases to reflect real world behavior, and your application will be much more solid when it ships.

Friday, March 5, 2010

Forcing a BSOD

The Blue Screen of Death is one of the most unpleasant events a person can face. It ranks right up there with doing your income taxes, the unexpected visit from your least favorite relative, and hemorrhoidal flare-ups. However, there are times when you actually want to have a BSOD event happen. Maybe you're doing some extreme failover testing, or maybe you want to show how your software can recover from a disastrous situation.

If that's the case, then you need a consistent way to force a BSOD. The steps below show you how to do it:

- Go to Start>Run, and launch the Registry Editor (Regedit.exe).

- Go to HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\i8042prt\Parameters.

- Go to Edit, select New | DWORD Value and name the new value CrashOnCtrlScroll.

- Double-click the CrashOnCtrlScroll DWORD Value, type 1 in the Value Data textbox, and click OK.

- Close the Registry Editor and restart your system.
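If you have to set this up on several test machines, the registry steps above can be scripted instead of clicked through. This is a sketch only: CrashOnCtrlScrollSetup and Enable are hypothetical names, and I'm assuming the program runs on Windows from an elevated (administrator) process, since it writes under HKEY_LOCAL_MACHINE. You still need to restart the machine afterwards.

```csharp
using Microsoft.Win32;

// Hypothetical helper that applies the registry change described in the steps above.
public static class CrashOnCtrlScrollSetup
{
    public const string KeyPath =
        @"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\i8042prt\Parameters";

    public const string ValueName = "CrashOnCtrlScroll";

    // Creates (or overwrites) the CrashOnCtrlScroll DWORD and sets it to 1.
    // Assumption: the caller is running elevated on Windows.
    public static void Enable()
    {
        Registry.SetValue(KeyPath, ValueName, 1, RegistryValueKind.DWord);
    }
}
```

Try it on a throwaway machine first; a typo in a registry path is not something you want to discover on your main test box.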

When you want to force a BSOD, press and hold the [Ctrl] key on the right side of your keyboard, and then press the [ScrollLock] key twice. The BSOD will appear.

Note: If your system reboots instead of displaying the BSOD, you'll have to disable the Automatically Restart setting in the System Properties dialog box. To do so, follow these steps:

- Press [Windows]-Break.

- Select the Advanced tab.

- Click the Settings button in the Startup And Recovery panel.

- Clear the Automatically Restart check box in the System Failure panel.

- Click OK twice.

Friday, February 26, 2010

Interpreter

In the movie "The Fifth Element" Bruce Willis turns to another character and says, "Lady, I only speak two languages - English and Bad English."

And those are exactly the two languages we all need to speak in order to succeed, whether we're testers, developers, sales people or executives. The first is to ensure that we can communicate our ideas clearly and effectively to our audiences. The second is so we can understand and interpret what our customers, co-workers, and others are saying.

Now understand, when I say Bad English, I'm not referring to people who use dangling modifiers, end sentences with prepositions, or any of the other Grammatical Cardinal Sins that your 10th grade teacher warned you about. I'm referring to the rambling stream of consciousness email messages that some people send; the people who speak only in acronyms; the people who use obscure regional slang. In short, I'm saying you need to speak the language of the people who *can't* communicate effectively, so that you yourself *can*.

If you can read a confusing email message, interpret it correctly, clarify it, and then re-communicate it, you'll be able to help others get their messages across, and you'll be able to get your own messages across more effectively because you'll understand how these people think. How many times during a sales cycle or a development cycle have you seen other people bounce emails back and forth, never quite getting their points across, because they weren't speaking each other's languages? I've seen that more times than I can count, and it's a frustrating experience.

So how do you start becoming an interpreter of Bad English? For starters, you need to understand the context of the user's message. What feature or service are they talking about? Are there keywords in their message that might shed some light on what they're looking to do? Put the message in your own words, using the simplest possible language. Once you've done that, run it by the original sender to make sure you've understood correctly. If they say yes, great. If they say no, they'll elaborate on their situation. Don't worry about getting it right the first time; people will appreciate that you're trying to help them.

There are tons of reference materials out there on how to communicate effectively - just poke around on Amazon. If you want examples of Bad English, just ask your support team to show you the messages they receive every day. It's amazing how little detail or thought people will put into a message that's asking for help. It will show you how your users think, and that will help you communicate more effectively with them.

This same principle applies to any actual language - French and Bad French, Spanish and Bad Spanish, etc. The important thing is that you learn to understand what people are saying, and are able to respond to them. The other piece to this is that you're able to effectively communicate and convey your own ideas. Once you can do that, you're one step farther on the path to success in your field.

Friday, February 19, 2010

Performance Testing 101 - 4

The last thing I want to talk about for performance testing is tools. There are a lot of great tools out there, both commercial and open source. Do some Googling to see what's available, and what other people think of those tools.

Spend some time evaluating tools before you make a decision. Make sure that the tools can provide the information you need in the format you want before you pull the trigger.

While you're running your load tests, you're going to need to keep tabs on your server's behavior. Make sure that the tool you're working with can monitor the server's resources as unobtrusively as possible. If your tool of choice doesn't have server monitoring capabilities, then you can use a tool built into Windows called Perfmon. Perfmon lets you keep tabs on many things, but at the minimum, you'll want to track CPU usage (the % Processor Time counter), Available MBytes (the amount of RAM still available on the system), Bytes Sent/sec and Bytes Received/sec. This will give you a flavor for how your system's doing overall. You can also track items related to SQL databases or the .NET framework, so look through the list of what's available and see what meets your needs.
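Once a counter log has been collected, it helps to boil it down to a few numbers per counter. Here is a minimal sketch in Python, assuming the log was saved as CSV with a timestamp in the first column and one column per counter. The function name and the sample data are made up for illustration, and a real log will have longer counter names.

```python
import csv
import io
from statistics import mean


def summarize_counters(csv_text):
    """Return {counter_name: (average, maximum)} for a counter log in CSV form.

    Assumes the first column is a timestamp and every other column is one
    counter. Blank or non-numeric samples are skipped.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    samples = {name: [] for name in header[1:]}
    for row in reader:
        for name, value in zip(header[1:], row[1:]):
            try:
                samples[name].append(float(value))
            except ValueError:
                continue  # missing sample for this interval
    return {
        name: (mean(values), max(values))
        for name, values in samples.items()
        if values
    }


# Made-up sample data, for illustration only.
SAMPLE_LOG = """Time,% Processor Time,Available MBytes
02/19/2010 10:00:00,35.0,2048
02/19/2010 10:00:05,85.0,1900
02/19/2010 10:00:10, ,1850
"""

for counter, (average, peak) in summarize_counters(SAMPLE_LOG).items():
    print(f"{counter}: average {average:.1f}, peak {peak:.1f}")
```

Looking at the peak alongside the average matters here: a server can average 60% CPU and still spend part of the run pinned near 100%.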

As you can see, there's a lot involved with performance testing, and these posts have just touched the surface. The thing to remember here is that performance testing is *very* different from functional testing. You'll need to learn the difference between a 302 and a 304 HTTP return code. You'll need to understand what parts of your application use system resources and why. If you've never done performance testing, be ready for a pretty steep learning curve. Make sure you budget time, whether it's on or off the clock, to learn as much as you can.
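To make the 302 versus 304 point concrete: a 302 (Found) redirects the client to a different URL, while a 304 (Not Modified) tells the client its cached copy is still good, so the server doesn't send the body again. A load test that sees mostly 304s is exercising the server much less than one that pulls full pages. Here is a small Python sketch that tallies status codes from a test run. The list of codes is made up, and the function name is just for illustration.

```python
from collections import Counter
from http import HTTPStatus


def tally_statuses(status_codes):
    """Count responses by status code and attach the standard reason phrase."""
    counts = Counter(status_codes)
    return {
        code: (HTTPStatus(code).phrase, count)
        for code, count in sorted(counts.items())
    }


# Hypothetical status codes recorded during a short test run.
observed = [200, 200, 302, 304, 304, 200, 500]
for code, (phrase, count) in tally_statuses(observed).items():
    print(code, phrase, count)
```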

I'm not a performance testing expert by any means, so now I'll point you to the people who are :)

Corey Goldberg has a great blog and builds performance testing tools. Definitely check him out:

http://coreygoldberg.blogspot.com/

Twitter: @cgoldberg

Scott Barber is a performance testing guru:

http://www.perftestplus.com/scott_blog.php

Bob Dugan is an extremely smart performance tester and a great guy to work with:

http://www.stonehill.edu/x8127.xml

Here are a couple of helpful books on performance testing:

Performance Solutions: A Practical Guide to Creating Responsive, Scalable Software

http://www.amazon.com/Performance-Solutions-Practical-Creating-Responsive/dp/0201722291

Performance Testing Microsoft .NET Web Applications

http://www.amazon.com/Performance-Testing-Microsoft-Applications-Pro-Developer/dp/0735615381/ref=sr_1_2?ie=UTF8&s=books&qid=1266416753&sr=8-2-spell

Friday, February 12, 2010

Performance Testing 101 - 3

Now that we've talked about scenarios, the next thing I want to discuss is your performance testing environment.

Your environment should be an isolated, standalone network. All "normal" network activities like virus scans, automatic system updates and backups should be disabled. You don't want anything running that could skew your test results. Now, some people may question this, saying it's not a "real world" scenario. At this point, you're trying to see how fast your system can perform under the absolute best conditions. It's a way to say, "OK, we are absolutely positive we can handle this number of transactions/second." My experience has been that if you have other things running and the numbers come back bad, people will immediately jump on that as the cause. "Oh, you had a virus scanner running - that's probably messing up the results. Uninstall the scanner and run the tests again." If your tests take days to run, that's not what you want to hear, especially if the test results come back the same as they were before.

Having your tests on an isolated network also ensures that normal day-to-day traffic isn't skewing your results. I once had four hours' worth of testing negated because someone three cubes down started downloading MSDN disks. I wound up having to do my tests at night after everyone had gone home in order to ensure there wasn't any rogue traffic. Again, having an isolated network will save you a lot of testing time and frustration in the long run.

The other thing to keep in mind is that your test systems should match up to what you'll be using in production. So whatever hardware your system will run on in the real world is what you should be testing with. Some IT folks balk at this, citing cost as a problem. Thing is, there are issues you won't be able to find if you're just testing with a bunch of hand-me-down systems. If you're not running on a box that has the same type of processor, how will you know if you're taking full advantage of that processor's architecture? This extends beyond just the systems themselves. Switches, NICs, even the network cable involved can impact your tests' performance. Don't fall into the trap of "well, we'll be using better hardware in the field, so performance will be better", because it might not.

Friday, February 5, 2010

Performance Testing 101 - 2

Ok, so now that we've taken care of terminology and gotten our performance baselines, it's time to start thinking about configuring our scenarios. This is where you figure out how many people will be performing a given action simultaneously. Again, if you are testing a pre-existing website, you can go through your web server's logs to see how many people typically access a given page at a time.
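As a rough illustration of mining the logs, here is a Python sketch that finds the busiest one-minute window for each page. It assumes the log lines are in Common Log Format. Your web server may use a different format, in which case the pattern would need to change. Requests per minute is only a stand-in for "simultaneous" users, but it gives you a starting number. The sample lines are made up.

```python
import re
from collections import Counter

# Pulls the timestamp and the requested path out of a Common Log Format line.
LOG_LINE = re.compile(r'\[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+)')


def peak_requests_per_minute(lines):
    """Return {path: busiest one-minute request count} for the given log lines."""
    per_minute = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue  # skip lines that are not in the assumed format
        minute = match.group("ts")[:17]  # e.g. "05/Feb/2010:09:15"
        per_minute[(match.group("path"), minute)] += 1
    peaks = {}
    for (path, _minute), count in per_minute.items():
        peaks[path] = max(peaks.get(path, 0), count)
    return peaks


# Made-up log lines, for illustration only.
sample = [
    '10.0.0.1 - - [05/Feb/2010:09:15:02 -0500] "GET /login HTTP/1.1" 200 512',
    '10.0.0.2 - - [05/Feb/2010:09:15:40 -0500] "GET /login HTTP/1.1" 200 512',
    '10.0.0.3 - - [05/Feb/2010:09:16:05 -0500] "GET /login HTTP/1.1" 200 512',
    '10.0.0.1 - - [05/Feb/2010:09:16:30 -0500] "GET /search HTTP/1.1" 200 2048',
]
print(peak_requests_per_minute(sample))
```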

If you're testing a never-before-released site, though, this part gets tricky. You'll need to make some educated guesses around your users' behavior. Let's say we're testing an e-commerce site, and we're going to roll it out to 1,000 users. Let's further assume that these users are equally divided between the east and west coasts of the USA (500 on each coast). For test cases, we want to perform logins, searches, and purchases.

Now, the knee-jerk reaction is to say that since you have 1,000 users, your system should be able to handle 1,000 simultaneous logins. That may be true, but is it a realistic scenario? In the real world, your users are separated by time zones, some of them may not be interested in purchasing a product today, some may be on vacation, some of them will only shop while they're on lunch break, and others will only shop at night after their kids have gone to bed. So in considering this, you may want to start out verifying that your system can handle 250 or 300 users logging in simultaneously. Then build up from there.

The next thing to consider is the frequency of the actions. Each user logs into the site once, but once they're in, how many times do they perform a search? If you go to Amazon, how many products do you search for in a single session? Between physical books, eBooks, movies and toys, I'd say I probably search for 2 or 3 items each time I log in. So that means searches are performed 2 or 3 times as often as logins. So if you're planning on having 250 - 300 users log in to start with, then you're looking at 500 - 900 searches. Also consider the purchase scenario - not everyone who logs in and searches is going to buy something; maybe half of the people, or a third of the people who log in will actually make a purchase. So you're looking at roughly 85 - 150 purchases for a scenario there.
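The arithmetic above is easy to put in a small script so you can play with the assumptions. This is just a sketch in Python: the function and parameter names are made up, and the ratios are the guesses from this post, not measured data.

```python
def scenario_sizes(logins, searches_per_login, purchase_fraction):
    """Turn (low, high) estimates into (low, high) counts per scenario.

    logins:             range of simultaneous logins to start with
    searches_per_login: range of searches each logged-in user performs
    purchase_fraction:  range of the share of logged-in users who buy
    """
    low_logins, high_logins = logins
    low_searches, high_searches = searches_per_login
    low_buy, high_buy = purchase_fraction
    return {
        "logins": (low_logins, high_logins),
        "searches": (low_logins * low_searches, high_logins * high_searches),
        "purchases": (int(low_logins * low_buy), int(high_logins * high_buy)),
    }


sizes = scenario_sizes(
    logins=(250, 300),
    searches_per_login=(2, 3),
    purchase_fraction=(1 / 3, 1 / 2),
)
for scenario, (low, high) in sizes.items():
    print(f"{scenario}: {low} - {high}")
```

With a third of 250 logins, the low end for purchases works out to 83, so treat all of these figures as rough starting points and adjust them as you learn more about your users.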

So take some time and think about your end users' behavior when defining scenarios for load testing - don't make it strictly a numbers game.

Next week, we'll talk about your performance testing environment.
