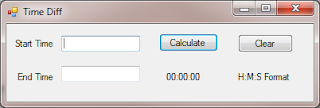

This was a frustrating experience, and one that was prone to human error. So I wrote a little C# app that allowed me to enter two times and see the difference between them. This let me note the test durations much faster and more precisely. To build your own time diff tool, fire up Visual Studio and create a form that looks like this:

For reference, I named the Start Time field txtStart, the End Time field txtStop, the Calculate button cmdCalculate, and the Clear button cmdClear. I was lazy and left the label that displays 00:00:00 with its default name, label1.

Now, use the following code for the Calculate button's click handler:

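    private void cmdCalculate_Click(object sender, EventArgs e)
    {
        // Only calculate when both fields have been filled in.
        if ((txtStart.Text != "") && (txtStop.Text != ""))
        {
            // A time-only string parses as that time on today's date.
            // DateTime.Parse throws a FormatException on unrecognized input.
            DateTime start = DateTime.Parse(txtStart.Text);
            DateTime stop = DateTime.Parse(txtStop.Text);
            // Subtracting two DateTime values yields a TimeSpan.
            TimeSpan duration = stop - start;
            label1.Text = duration.ToString();
        }
    }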
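The handler above calls DateTime.Parse, which throws if a field contains something that isn't a time. If you'd rather have the app quietly ignore a typo, one option is to move the calculation into a small helper built on DateTime.TryParse. This is only a sketch: the DurationHelper class and its TryGetDuration method are names I made up for illustration and are not part of the original app.

```csharp
using System;

// Hypothetical helper (not part of the original app): computes the
// difference between two time strings without throwing on bad input.
public static class DurationHelper
{
    // Returns true and sets 'duration' when both strings parse as times;
    // returns false and leaves 'duration' at zero otherwise.
    public static bool TryGetDuration(string startText, string stopText, out TimeSpan duration)
    {
        duration = TimeSpan.Zero;
        DateTime start;
        DateTime stop;

        // DateTime.TryParse reports failure through its return value
        // instead of throwing a FormatException.
        if (!DateTime.TryParse(startText, out start) || !DateTime.TryParse(stopText, out stop))
        {
            return false;
        }

        duration = stop - start;
        return true;
    }
}
```

Inside cmdCalculate_Click you could then call DurationHelper.TryGetDuration(txtStart.Text, txtStop.Text, out duration) and only update label1 when it returns true.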

Here's the code for the Clear button:

    private void cmdClear_Click(object sender, EventArgs e)
    {
        txtStart.Text = "";
        txtStop.Text = "";
        label1.Text = "00:00:00";
    }

And that's it. You now have a little app that will calculate the difference between two times for you. One thing to note here is that the app isn't intended to measure durations longer than 24 hours, and you need to enter the times in 24-hour clock format. So if your test ended at 2 pm, you'd enter 14:00:00. Because both values are parsed as times on the same day, an end time that is earlier than the start time produces a negative duration.
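If your tests sometimes run past midnight (say, a start of 23:30:00 and an end of 00:15:00), a small tweak can handle that case. The sketch below is one possible approach, not the code from the app above: the ClockDiff class and its Between method are hypothetical names, and it assumes that an end time earlier than the start time always means the test finished on the following day. It also insists on the 24-hour H:mm:ss format by using DateTime.ParseExact.

```csharp
using System;
using System.Globalization;

// Hypothetical helper (not part of the original app): diffs two
// 24-hour clock times and wraps around midnight when needed.
public static class ClockDiff
{
    public static TimeSpan Between(string startText, string stopText)
    {
        // "H:mm:ss" accepts 24-hour times such as 14:00:00 or 9:05:00.
        // NoCurrentDateDefault puts both values on the same fixed date,
        // so only the time of day affects the result.
        DateTime start = DateTime.ParseExact(startText, "H:mm:ss",
            CultureInfo.InvariantCulture, DateTimeStyles.NoCurrentDateDefault);
        DateTime stop = DateTime.ParseExact(stopText, "H:mm:ss",
            CultureInfo.InvariantCulture, DateTimeStyles.NoCurrentDateDefault);

        TimeSpan duration = stop - start;

        // Assumption: a negative result means the end time falls on the
        // next day, so shift it forward by 24 hours.
        if (duration < TimeSpan.Zero)
        {
            duration += TimeSpan.FromDays(1);
        }

        return duration;
    }
}
```

With this in place, ClockDiff.Between("23:30:00", "00:15:00") gives 45 minutes instead of a negative value. It still can't tell a 45 minute test from one that ran 24 hours and 45 minutes, so the 24-hour limit remains.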

This is a nice example of creating a tool that augments manual testing. It doesn't do any testing on its own, but it does let a tester perform a given activity faster and more efficiently. Always be looking for little opportunities like this, and your testers will thank you for them.